Updated: I've removed some of the "ancillary changes" instructions under wh-questions, because most of those have since been incorporated into the Matrix. I've also added some information about using the variable property mapping to cut down on generator output.

As usual, check the write-up instructions first.

Especially in the test corpus section, but also in general, it will be helpful to keep notes along the way as you are doing grammar development.

Requirements for this assignment

Before making any changes to your grammar for this lab, run a baseline test suite instance. If you decide to add items to your test suite for the material covered here, consider doing so before modifying your grammar so that your baseline can include those examples. (Alternatively, if you add examples in the course of working on your grammar and want to make the snapshot later, you can do so using the grammar you turned in for Lab 6.)

The negation library is more robust than in previous years, so we expect that in most cases the output is working or close to working.

The goal of this section is to parse one more sentence from your test corpus than you could before starting this section. In most cases, that will mean parsing one sentence total. In your write-up, you should document what you had to add to get the sentence working. Note that it is possible to get full credit here even if the sentence ultimately doesn't parse, as long as you document what you still have to get working.

This is a very open-ended part of the lab (even more so than usual), which means that (A) you should get started early and post to Canvas so I can assist in developing analyses of whatever additional phenomena you run across, and (B) you'll have to restrain yourselves: the goal isn't to parse the whole test corpus this week ;-). In fact, I won't have time to support the extension of the grammars by more than one sentence each, so please *stop* after one sentence.

In constructing your testsuite for this phenomenon in a previous lab, you were asked to find the following:

In the following, I'll share the tdl I've developed in a small English grammar, for two possibilities:

Your goal for this part of the lab is to use this as a jumping-off point to handle wh questions as they manifest in your language. Of course, I expect languages to differ in the details, so please start early and post to Canvas so we can work it out together.

TDL type and entry definitions for my wh-pronouns (used in both versions):

```tdl
wh-pronoun-noun-lex := norm-hook-lex-item & basic-icons-lex-item &
  [ SYNSEM [ LOCAL [ CAT [ HEAD noun,
                           VAL [ SPR < >,
                                 SUBJ < >,
                                 COMPS < >,
                                 SPEC < > ] ],
                     CONT [ HOOK.INDEX.PNG.PER 3rd,
                            RELS <! [ LBL #larg,
                                      ARG0 #ind & ref-ind ],
                                    [ PRED "wh_q_rel",
                                      ARG0 #ind,
                                      RSTR #harg ] !>,
                            HCONS <! [ HARG #harg,
                                       LARG #larg ] !> ] ],
             NON-LOCAL.QUE <! #ind !> ] ].

what := wh-pronoun-noun-lex &
  [ STEM < "what" >,
    SYNSEM.LKEYS.KEYREL.PRED "_thing_n_rel" ].

who := wh-pronoun-noun-lex &
  [ STEM < "who" >,
    SYNSEM.LKEYS.KEYREL.PRED "_person_n_rel" ].
```

In order to make sure the diff-list appends for the non-local features don't leak (leaving you with underspecified QUE or SLASH), there may be a few ancillary changes required. For example:

```tdl
basic-head-filler-phrase :+
  [ ARGS < [ SYNSEM.LOCAL.COORD - ], [ SYNSEM.LOCAL.COORD - ] > ].

wh-ques-phrase := basic-head-filler-phrase & interrogative-clause &
                  head-final &
  [ SYNSEM.LOCAL.CAT [ MC bool,
                       VAL #val,
                       HEAD verb & [ FORM finite ] ],
    HEAD-DTR.SYNSEM.LOCAL.CAT [ MC na,
                                VAL #val & [ SUBJ < >,
                                             COMPS < > ] ],
    NON-HEAD-DTR.SYNSEM.NON-LOCAL.QUE <! ref-ind !> ].

extracted-comp-phrase := basic-extracted-comp-phrase &
  [ SYNSEM.LOCAL.CAT.HEAD verb,
    HEAD-DTR.SYNSEM.LOCAL.CAT.VAL.SUBJ cons ].

extracted-subj-phrase := basic-extracted-subj-phrase &
  [ SYNSEM.LOCAL.CAT.HEAD verb,
    HEAD-DTR.SYNSEM.LOCAL.CAT.VAL.COMPS < > ].
```

Note that the constraints on SUBJ and COMPS in the two types just above are somewhat specific to English; depending on the word order facts of your language, you may need to constrain them differently.

Note also that all the phrase structure rules require instances in rules.tdl. For example:

```tdl
wh-ques := wh-ques-phrase.
```

Note, however, that you do NOT want to create an instance of basic-head-filler-phrase directly: basic-head-filler-phrase is a supertype of wh-ques-phrase, and it is wh-ques-phrase that gets the rule instance.
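Following the same pattern, rules.tdl instances for the two extraction types defined above might look like the sketch below. The instance names extracted-comp and extracted-subj are illustrative assumptions, not required names; and if the rules.tdl generated by the customization system already contains instances of these types, don't add a second copy.

```tdl
; Sketch: rules.tdl instances for the extraction types defined above.
; The instance names (extracted-comp, extracted-subj) are hypothetical;
; skip any that already exist in your rules.tdl.
extracted-comp := extracted-comp-phrase.
extracted-subj := extracted-subj-phrase.
```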

Again, note that all the phrase structure rules require instances in rules.tdl.

```tdl
wh-int-cl := clause & head-compositional & head-only &
  [ SYNSEM [ LOCAL.CAT [ VAL #val,
                         MC bool ],
             NON-LOCAL non-local-none ],
    C-CONT [ RELS <! !>,
             HCONS <! !>,
             HOOK.INDEX.SF ques ],
    HEAD-DTR.SYNSEM [ LOCAL.CAT [ HEAD verb & [ FORM finite ],
                                  VAL #val &
                                    [ SUBJ < >,
                                      COMPS < > ] ],
                      NON-LOCAL [ SLASH <! !>,
                                  REL <! !>,
                                  QUE <! ref-ind !> ] ] ].
```
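By analogy with the wh-ques example, the rules.tdl instance for this head-only type could be as simple as the sketch below. The instance name wh-int is a hypothetical choice; only the type it instantiates (wh-int-cl) comes from the definition above.

```tdl
; Sketch: rules.tdl instance for the head-only wh-int-cl type.
; The instance name wh-int is hypothetical; any name not already
; used by another rule in rules.tdl would work.
wh-int := wh-int-cl.
```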

The general head-subj type assumes that QUE is empty, which won't fly in this case, so we need to redefine it. In the pseudo-English grammar, I did it this way:

```tdl
eng-subj-head-phrase := head-valence-phrase & head-compositional &
                        basic-binary-headed-phrase &
  [ SYNSEM phr-synsem &
      [ LOCAL.CAT [ POSTHEAD +,
                    HC-LIGHT -,
                    VAL [ SUBJ < >,
                          COMPS #comps,
                          SPR #spr ] ] ],
    C-CONT [ HOOK.INDEX.SF prop-or-ques,
             RELS <! !>,
             HCONS <! !>,
             ICONS <! !> ],
    HEAD-DTR.SYNSEM.LOCAL.CAT.VAL [ SUBJ < #synsem >,
                                    COMPS #comps,
                                    SPR #spr ],
    NON-HEAD-DTR.SYNSEM #synsem & canonical-synsem &
      [ LOCAL [ CAT [ VAL [ SUBJ olist,
                            COMPS olist,
                            SPR olist ] ] ],
        NON-LOCAL [ SLASH 0-dlist & [ LIST < > ],
                    REL 0-dlist ] ] ].
```

I then used this type in place of decl-head-subj-phrase in my definition of subj-head-phrase:

```tdl
subj-head-phrase := eng-subj-head-phrase & head-final &
  [ HEAD-DTR.SYNSEM.LOCAL.CAT.VAL.COMPS < > ].
```

If your grammar has a head-opt-subj rule, its type will need to be rewritten similarly.

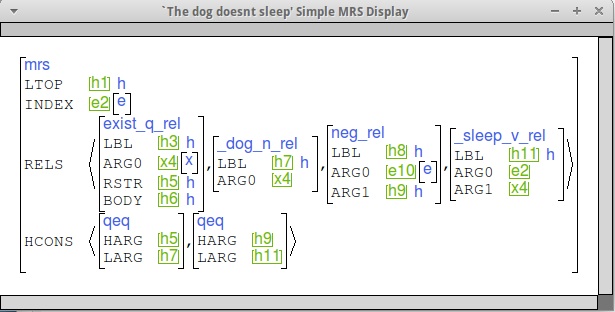

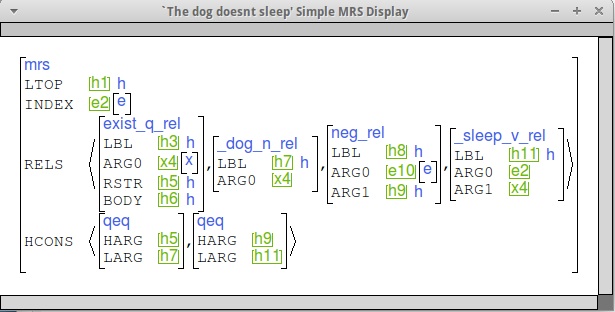

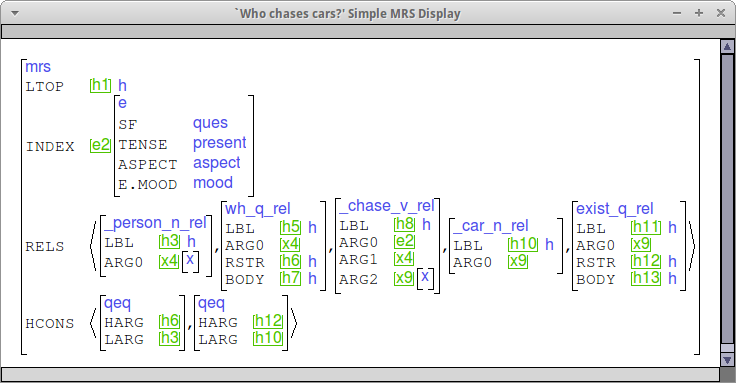

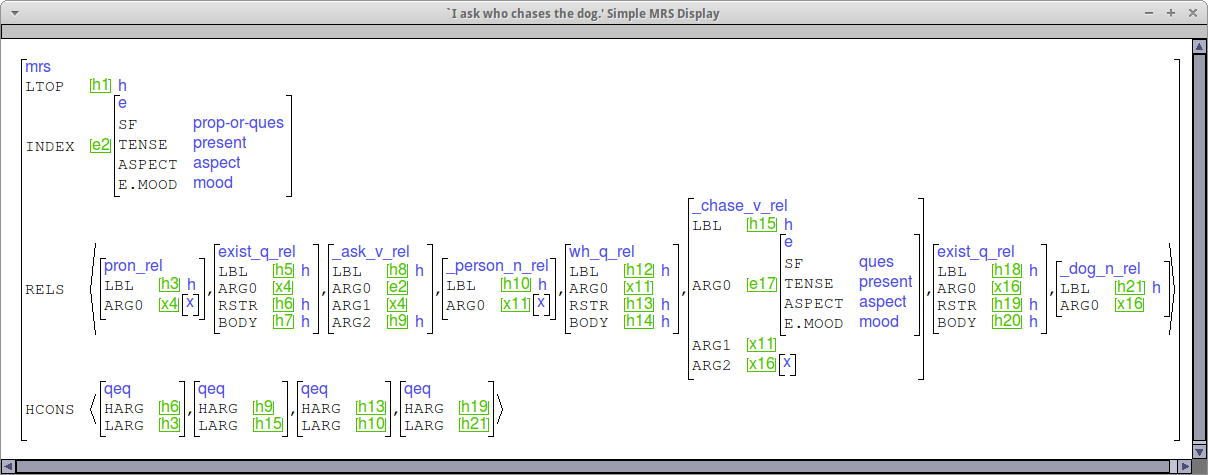

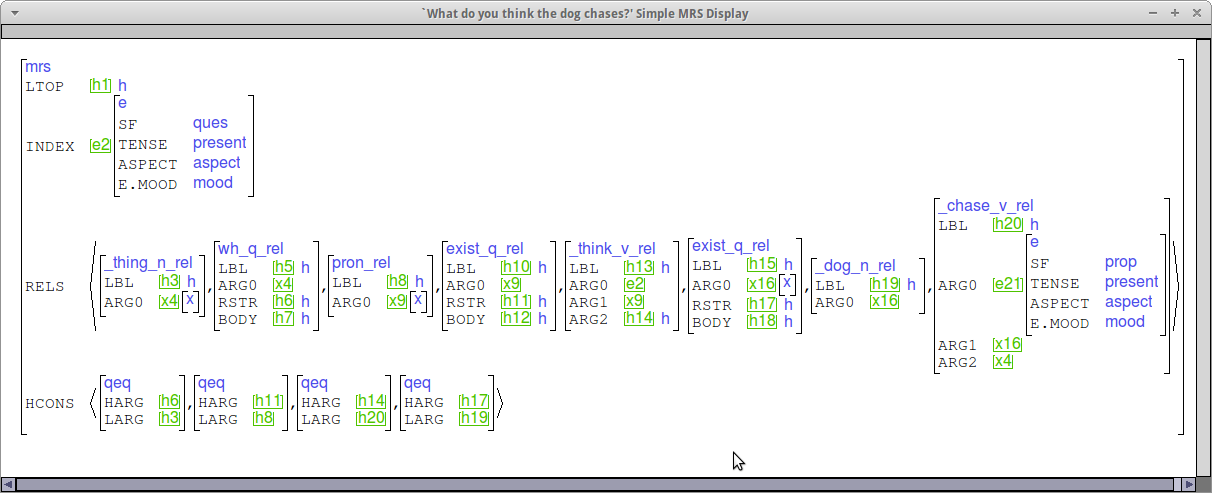

Below are some sample MRSs for wh questions, considering both subject and complement questions as well as matrix and embedded questions. Please use these as a point of comparison when you check your MRSs.

By this point in the quarter, it is common for the generator outputs to be frustratingly numerous. The generator allows us to see the full glory of the combinatorics of our grammars!

One place in which it can be convenient to cut that back somewhat is with underspecified variable properties. I'll illustrate here with aspect, though similar things can apply to other variable properties. If aspect is only optionally marked in your language, and you parse an item unmarked for aspect and then generate, you are probably seeing all of the aspect forms in the generator results. This can be averted by defining a type no-aspect that contrasts with your actual ASPECT values:

```tdl
no-aspect := aspect.
```

... and then using the variable property mapping in semi.vpm to take underspecified aspect to no-aspect. For example:

```
E.ASPECT : ASPECT
  perfective <> perfective
  progressive <> progressive
  imperfective <> imperfective
  * >> no-aspect
  no-aspect << [e]
```

Aside from the last two lines (which you should add, if your grammar falls into this category), you should leave what's there as is (it's been customized to the aspect values that you entered).

(There is some documentation of the variable property mapping machinery here.)

For each of the following phenomena, please include the following in your write-up:

Phenomena:

In addition, your write-up should include a statement of the current coverage of your grammar over your test suite (using numbers you can get from Analyze | Coverage and Analyze | Overgeneration in [incr tsdb()]) and a comparison between your baseline test suite run and your final one for this lab (see Compare | Competence).

```shell
svn export yourgrammar iso-lab7
```

For git, please do the equivalent.

```shell
tar czf iso-lab7.tgz iso-lab7
```

```shell
tar czf lab7.tgz *
```