TAPESTRY: The Art of Representation and Abstraction

3D Data: Blobs

What's it all about?

The great majority of buildings have planar or piece-wise planar skins. However,

- Some people would like to design buildings with curves, even though

- 3D models ALWAYS consist of (at some level) sets of flat polygons, and

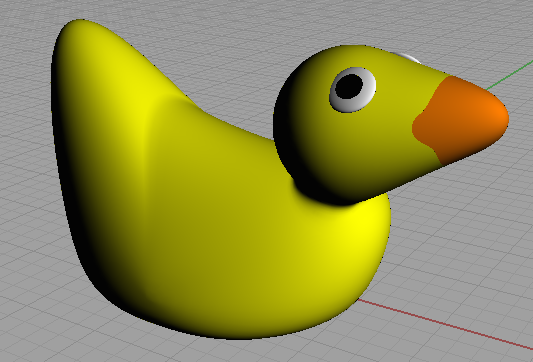

- Some shapes (like the rubber-ducky at right) are just plain curvy!

Simple cases: regular geometry

We've actually encountered this problem before, in drawing circles, cylinders, etc. The concept of a circle is quite simple: a center (x, y) and a radius r. Given these three numbers and knowledge of the geometry of a circle, we can calculate the coordinates of any point on the circle. Computer displays draw straight line segments quickly, so for display purposes they use this information to approximate a circle as a polygon with many short sides. The point is this:

The display representation of the circle can be constructed (computed) from the abstract data (stored) representation using an algorithm, and at any arbitrary level of detail.
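
As a minimal sketch of this idea (the function names are hypothetical, not taken from any particular CAD program), the stored representation is just the three numbers `(cx, cy, r)`, and the display polygon is computed on demand with however many segments we ask for:

```python
import math


def circle_to_polygon(cx, cy, r, segments):
    """Compute a display polygon for the circle with center (cx, cy) and radius r."""
    if segments < 3:
        raise ValueError("a polygon needs at least 3 vertices")
    points = []
    for i in range(segments):
        theta = 2 * math.pi * i / segments  # evenly spaced angles around the circle
        points.append((cx + r * math.cos(theta), cy + r * math.sin(theta)))
    return points


def polygon_perimeter(points):
    """Total edge length of a closed polygon (the last vertex connects back to the first)."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:] + points[:1]))


# Same three stored numbers, two levels of detail.
coarse = circle_to_polygon(0.0, 0.0, 1.0, 8)
fine = circle_to_polygon(0.0, 0.0, 1.0, 256)
```

The 8-sided version is visibly faceted, while the perimeter of the 256-sided version differs from the true circumference 2πr by less than 0.001. Nothing stored about the circle had to change to get the finer version.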

Strategy 1: Meshes

The example of the circle, above, represents a fairly advanced way to approach the problem. There are a couple of other ways that you should also be aware of.

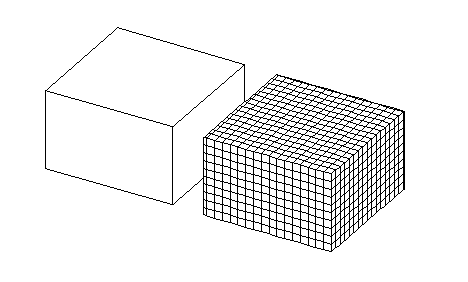

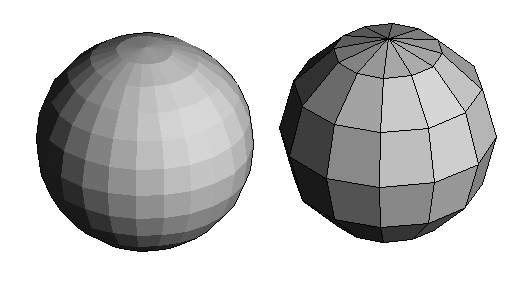

Brute Force

Starting with basic geometrical primitives (spheres, cylinders, etc.) we can make curvy collections of polygons. Then we can manipulate the individual points in the meshes (a process sometimes called "vertex tweaking"), moving them around to achieve the final form. Even if we can select multiple points to move, this is a labor-intensive process.
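
To make the brute-force approach concrete, here is a sketch that builds a sphere primitive as a grid of vertices plus quad faces, then edits one vertex by hand. The vertex layout and the helper names `uv_sphere` and `tweak_vertex` are illustrative assumptions, not how Rhino or any other modeler actually stores its meshes. Because faces store only vertex indices, moving a vertex reshapes every face that uses it, but each vertex still has to be moved individually.

```python
import math


def uv_sphere(radius, rings, sectors):
    """Sphere primitive as a vertex grid plus quad faces (tuples of vertex indices).

    Vertices run ring by ring from the north pole (phi = 0) to the south pole
    (phi = pi). The two pole rings repeat a single point `sectors` times, so the
    quads touching the poles are degenerate; that keeps the indexing simple.
    """
    vertices = []
    for i in range(rings + 1):
        phi = math.pi * i / rings
        for j in range(sectors):
            theta = 2 * math.pi * j / sectors
            vertices.append([radius * math.sin(phi) * math.cos(theta),
                             radius * math.sin(phi) * math.sin(theta),
                             radius * math.cos(phi)])
    faces = []
    for i in range(rings):
        for j in range(sectors):
            a = i * sectors + j                  # this ring, this sector
            b = i * sectors + (j + 1) % sectors  # this ring, next sector (wraps around)
            faces.append((a, b, b + sectors, a + sectors))
    return vertices, faces


def tweak_vertex(vertices, index, dx, dy, dz):
    """Brute-force edit: move a single vertex; faces that reference it follow along."""
    vertices[index][0] += dx
    vertices[index][1] += dy
    vertices[index][2] += dz


verts, faces = uv_sphere(1.0, rings=8, sectors=16)
# Vertex 64 (ring 4, sector 0) lies on the equator at (1, 0, 0); pull it outward.
tweak_vertex(verts, 64, 0.5, 0.0, 0.0)
```

Shaping even a simple bump this way means repeating `tweak_vertex` for every neighboring vertex, which is exactly why the process is labor-intensive.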

Systematic Edits

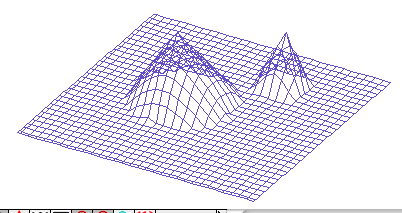

Lots of mesh editing applies nearly the same edit to adjacent points (imagine forming a face from a sphere by pushing and pulling points). Perhaps there is a way to move points in groups rather than one at a time, in response to a single, systematic manipulation. We might take a cylinder and "bend" it, or "push" on a planar mesh with a shape to deform it.

The trick here is to select the points to edit and define the editing action that is applied systematically to those points, in a way that works with many very different collections of points.
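
One common way to make an edit systematic is a distance-based falloff: a single rule decides both which points move (those within some radius of a chosen center) and how far (fully at the center, fading to nothing at the edge). The sketch below assumes a smooth polynomial falloff curve, `(1 - (d/r)^2)^2`; that curve and the names `falloff` and `soft_move` are illustrative choices, not the formula any particular program uses.

```python
import math


def falloff(d, radius):
    """Assumed smooth falloff: weight 1 at the center, easing to 0 at `radius` and beyond."""
    if d >= radius:
        return 0.0
    t = d / radius
    return (1.0 - t * t) ** 2


def soft_move(points, center, radius, offset):
    """Move each point by `offset` scaled by its falloff weight.

    One rule does both jobs: it selects the points (those closer than `radius`)
    and sets how far each one moves, so it works on any collection of points.
    """
    moved = []
    for p in points:
        w = falloff(math.dist(p, center), radius)
        moved.append(tuple(c + w * o for c, o in zip(p, offset)))
    return moved


# "Push" a flat 11 x 11 grid upward around its middle.
grid = [(float(x), float(y), 0.0) for x in range(-5, 6) for y in range(-5, 6)]
bumped = soft_move(grid, center=(0.0, 0.0, 0.0), radius=3.0, offset=(0.0, 0.0, 1.0))
```

The center point rises by the full offset, points three or more units away stay put, and the ones in between form a smooth dome, all from one manipulation rather than 121 separate tweaks.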

Rhino allows you to create mesh geometries and edit them through control point editing (vertex tweaking), cage editing (which performs edits on all points within a volume of space), and soft move operations, which perform certain systematic edits.
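
Cage editing can be illustrated with the simplest possible cage: the eight corners of a box. Each point inside the box is described by its fractional position (u, v, w) along the box's three axes, and after the corners are moved, the point is rebuilt as a weighted blend of the new corner positions (trilinear interpolation, the basic building block of free-form deformation). This is a minimal sketch under those assumptions with hypothetical function names; real cage tools usually offer finer control lattices.

```python
import itertools


def box_corners(box_min, box_max):
    """Undeformed 2x2x2 cage: corner (i, j, k) takes the max side on each axis whose index is 1."""
    return {
        (i, j, k): (
            box_max[0] if i else box_min[0],
            box_max[1] if j else box_min[1],
            box_max[2] if k else box_min[2],
        )
        for i, j, k in itertools.product((0, 1), repeat=3)
    }


def cage_deform(points, box_min, box_max, corners):
    """Rebuild each point inside the box from the (possibly moved) cage corners.

    Points outside the box are returned unchanged.
    """
    result = []
    for p in points:
        # Fractional position (u, v, w) of the point along each axis of the box.
        u, v, w = ((p[a] - box_min[a]) / (box_max[a] - box_min[a]) for a in range(3))
        if not all(0.0 <= t <= 1.0 for t in (u, v, w)):
            result.append(tuple(p))
            continue
        new = [0.0, 0.0, 0.0]
        for (i, j, k), corner in corners.items():
            # Trilinear weight: 1 at this corner, 0 on the faces opposite it; all 8 sum to 1.
            weight = (u if i else 1 - u) * (v if j else 1 - v) * (w if k else 1 - w)
            for a in range(3):
                new[a] += weight * corner[a]
        result.append(tuple(new))
    return result


# Shear the cage: slide its top four corners one unit along x.
box_min, box_max = (0.0, 0.0, 0.0), (2.0, 2.0, 2.0)
cage = box_corners(box_min, box_max)
for (i, j, k), (x, y, z) in list(cage.items()):
    if k == 1:
        cage[(i, j, k)] = (x + 1.0, y, z)
deformed = cage_deform([(1.0, 1.0, 1.0), (1.0, 1.0, 2.0), (3.0, 3.0, 3.0)], box_min, box_max, cage)
```

Moving four cage corners shifts every enclosed point in proportion to its height (the middle point moves half as far as the top one), while the point outside the box is untouched.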

Strategy 2: Parametric Representations

This may sound hard, but it's not. Think back to the circle, which can be turned into an arbitrarily detailed polygon by simply doing some calculations. That is a parametric representation of a circle: it separates the visible geometry from the conceptual geometry. The only catch is that it's a circle, and circles don't give us a lot of "representational range" (they're boring). What to do?

Mathematicians and engineers have developed parametric definitions for a wide variety of curves and surfaces. These are often referred to as splines (for curves) or NURBS (most often used for surfaces, though NURBS curves exist too). "NURBS" stands for "non-uniform rational B-spline", where "B-spline" is short for "basis spline". The closely related Bézier curves are named after Pierre Bézier, an engineer at Renault who used them to describe car bodies.

The cool thing...

The display representation of the shape can be constructed (computed) from the control points (stored) representation using an algorithm, and at any arbitrary level of detail, just like for the circle, but the shape can be manipulated almost infinitely by moving the relatively small number of control points.
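
The simplest member of this family is the Bézier curve, and it shows the principle well. The sketch below evaluates a Bézier curve with de Casteljau's algorithm (repeated linear interpolation between neighboring control points) and samples it into a polyline at any requested level of detail. It deliberately leaves out the knot vectors and weights that full NURBS add, and the function names are hypothetical.

```python
def de_casteljau(control_points, t):
    """Point on the Bezier curve at parameter t in [0, 1], by repeated linear interpolation."""
    pts = [tuple(p) for p in control_points]
    while len(pts) > 1:
        # Replace each pair of neighbors with the point a fraction t of the way between them.
        pts = [tuple((1 - t) * a + t * b for a, b in zip(p, q)) for p, q in zip(pts, pts[1:])]
    return pts[0]


def bezier_polyline(control_points, segments):
    """Display representation: the curve sampled at segments + 1 evenly spaced parameters."""
    return [de_casteljau(control_points, i / segments) for i in range(segments + 1)]


# Four stored control points; the level of detail is chosen only when drawing.
ctrl = [(0.0, 0.0), (1.0, 2.0), (3.0, 2.0), (4.0, 0.0)]
preview = bezier_polyline(ctrl, 8)
final = bezier_polyline(ctrl, 200)
```

Moving any one of the four control points reshapes the whole curve smoothly, and both the 8-segment preview and the 200-segment version are recomputed from the same four stored points.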

These ways of representing lines and surfaces have tremendous representational range. Everything from straight lines and flat planes up to faces and kitchen tools can be represented and turned into renderable geometry, 3D printing data, or CNC control code as needed. A complex shape usually takes more than one NURBS surface, but adjacent surfaces can be constructed so that their positions, slopes, and curvature match along shared edges, collectively producing one continuous skin, as with the head at left.
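
The "rational" in NURBS is part of what gives this range. A plain polynomial curve cannot trace a circle exactly, but a rational one, which divides by a weighted sum, can. As a sketch (the function name is hypothetical), a quadratic rational Bézier with end points (1, 0) and (0, 1), corner control point (1, 1), and a middle weight of √2/2 traces an exact quarter of the unit circle:

```python
import math


def rational_quadratic_bezier(p0, p1, p2, w1, t):
    """Rational quadratic Bezier at t: end weights are 1, the middle control point has weight w1."""
    b0 = (1 - t) ** 2
    b1 = 2 * t * (1 - t) * w1
    b2 = t ** 2
    denom = b0 + b1 + b2  # dividing by the weighted sum is what makes the curve "rational"
    return tuple((b0 * a + b1 * b + b2 * c) / denom for a, b, c in zip(p0, p1, p2))


p0, p1, p2 = (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)
arc = [rational_quadratic_bezier(p0, p1, p2, math.sqrt(2) / 2, i / 16) for i in range(17)]
# With w1 = 1 the same control points give an ordinary (polynomial) Bezier, which bulges past the circle.
plain_mid = rational_quadratic_bezier(p0, p1, p2, 1.0, 0.5)
```

Every point in `arc` lies at distance 1 from the origin (up to rounding), while `plain_mid` lands at (0.75, 0.75), about 1.06 from the origin. The same machinery that draws free-form curves can therefore also reproduce the "boring" circle exactly.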

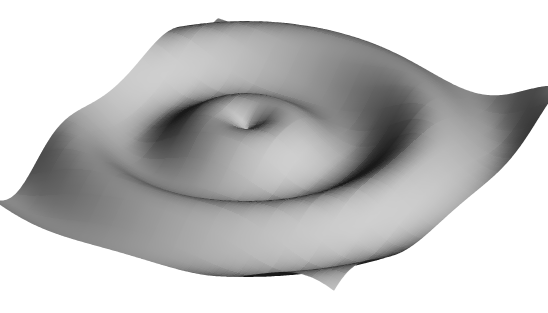

Strategy 3: Abstract Representations

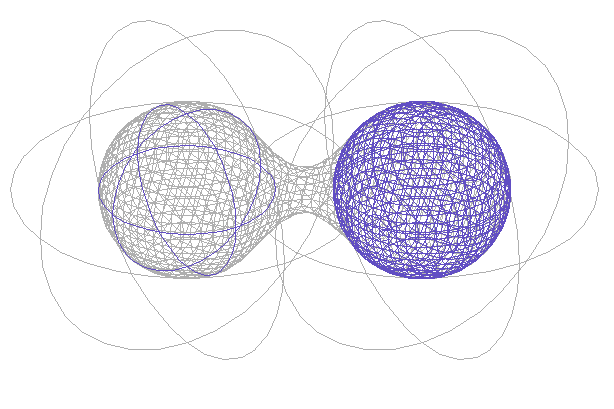

Related to parametric representations are various more abstract approaches. The one implemented in form-Z is called "Meta Balls" (Warning! Your word processor is likely to try to change that into "meat balls" ... don't let it!). Meta-balls operate on the same principle as isolux contours for lighting or isobars in weather maps: given a quantity that varies across a surface or through a volume of space, we can imagine surfaces of uniform value weaving through that space. Terrain contours are lines of constant elevation, isolux contours are lines of constant illumination, and isobars are lines of constant atmospheric pressure. Surfaces defined with Meta-balls are surfaces of constant value. They are formed by "evaluating" a set of objects (think light bulbs) to establish each one's contribution to the field. So the model you make defines the sources of influence, not the final geometry; the meta-ball algorithm computes the resulting surface. It's a bit like placing lamps in a room: the light falling on your reading depends on all the lamps in the space, and moving any one of them changes the distribution.
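
A minimal sketch of the idea in code: each ball is stored only as a center and a radius, every ball adds its influence to a scalar field, and the surface is wherever the summed field equals a threshold. The inverse-square falloff `r^2 / d^2` and the threshold of 1 are assumptions chosen for simplicity (practical implementations usually use falloff functions that reach zero at a finite distance), and the names are hypothetical. With this choice a lone ball's surface is a sphere of radius r, and two nearby balls fill in a gap between them that neither would reach alone.

```python
import math


def field(point, balls):
    """Summed influence at `point`; each ball is (center, radius) and adds r^2 / d^2."""
    total = 0.0
    for center, radius in balls:
        d2 = sum((p - c) ** 2 for p, c in zip(point, center))
        if d2 == 0.0:
            return math.inf  # the influence is unbounded at a ball's own center
        total += radius * radius / d2
    return total


def inside(point, balls, threshold=1.0):
    """The meta-ball surface is where field == threshold; points with a larger value are inside it."""
    return field(point, balls) >= threshold


# Two equal balls, far apart and close together, probed at the midpoint between them.
far = [((-1.5, 0.0, 0.0), 1.0), ((1.5, 0.0, 0.0), 1.0)]
near = [((-1.2, 0.0, 0.0), 1.0), ((1.2, 0.0, 0.0), 1.0)]
midpoint = (0.0, 0.0, 0.0)
```

At the midpoint, the far pair sums to about 0.89 (outside), while the near pair sums to about 1.39 (inside), even though either near ball alone contributes only about 0.69. Moving a source, like moving a lamp, changes the surface everywhere; a renderer would then extract the surface from this field (for example by sampling it on a grid).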

Last updated: November, 2015